As backend developers, we always want our apps to work well under all conditions, even when someone is trying to attack them. A race condition is a common problem that we need to handle in real-life applications. In this blog, let's clarify what a race condition is and how to avoid it.

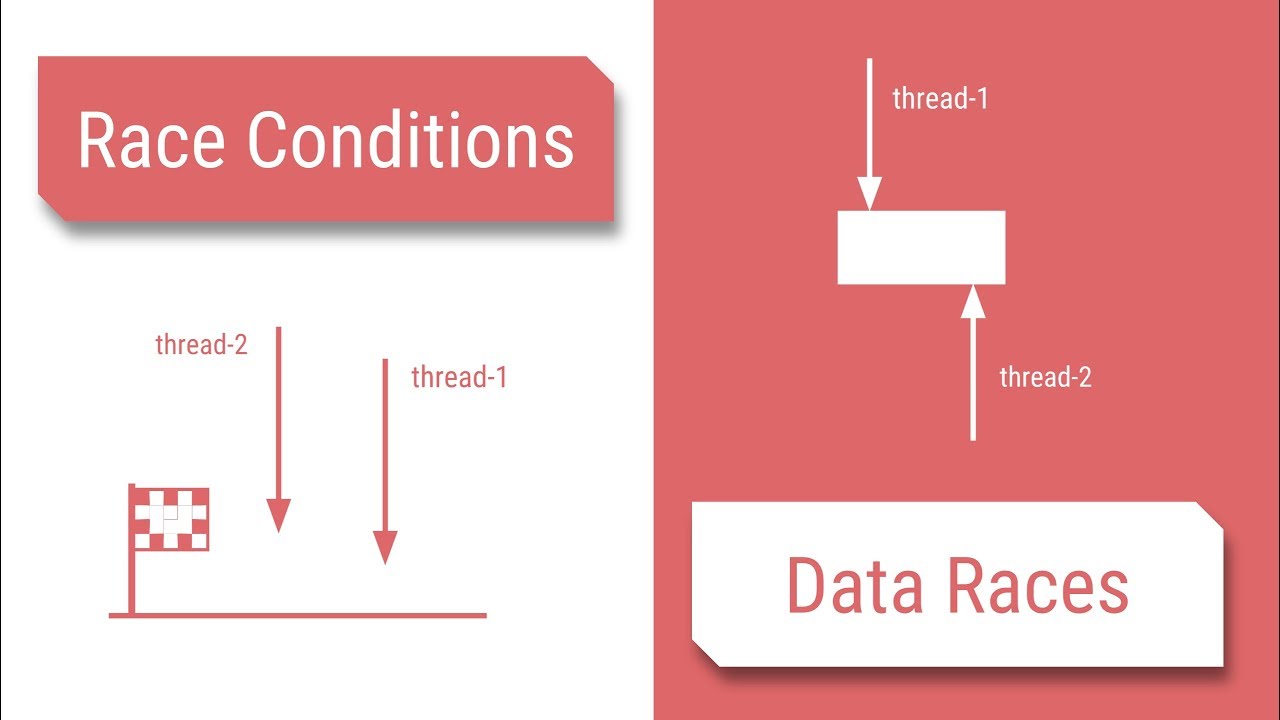

1. WTF is a race condition?

- A race condition happens when multiple processes try to read and update a shared resource (e.g. a database) at the same time, so one process can read an outdated version of that resource and then write a wrong state.

- Take a real-world example. Let's say you're building a bank application with a withdraw API, which checks the user's balance in the database and then decreases it by the amount the user entered. Things are simple and there is nothing to worry about when we only handle one request at a time. Now say 5 requests to withdraw 100$ arrive at this API at the same moment. In some backend stacks, e.g. Java, each request runs simultaneously in its own thread; let's call each thread a process. So now we have 5 processes running the withdraw code. Each process first queries the database to check the balance, then deducts from it. But here things go wrong: because these 5 processes read the database state at the same time, they all see a balance of 100$, which is valid, so they all transfer the money. A user with a 100$ balance can withdraw 500$ :)))) due to the race condition.
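The withdraw scenario above can be sketched as a small simulation. This is a minimal TypeScript sketch under stated assumptions: FakeDb, withdraw and simulateRace are hypothetical names, the "processes" are five overlapping async calls inside a single Node.js process rather than threads, and sleep() stands in for database latency. Each await gives the other calls a chance to run, so all five read the same 100$ balance.

```typescript
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Hypothetical in-memory "database" with artificial latency (illustration only).
class FakeDb {
  private balances: Map<number, number>;
  constructor(initial: Array<[number, number]>) {
    this.balances = new Map(initial);
  }
  async getBalance(userId: number): Promise<number> {
    await sleep(10); // simulate a query round trip
    return this.balances.get(userId) ?? 0;
  }
  async updateBalance(userId: number, balance: number): Promise<void> {
    await sleep(10); // simulate a write round trip
    this.balances.set(userId, balance);
  }
}

// Naive read-check-write withdraw: fine for one request at a time.
// Every `await` lets other requests run, so they can all read the same stale balance.
async function withdraw(db: FakeDb, userId: number, amount: number): Promise<boolean> {
  const balance = await db.getBalance(userId);
  if (balance < amount) return false;
  await db.updateBalance(userId, balance - amount);
  return true; // a real app would transfer the money here
}

// Five overlapping requests, each withdrawing 100$ from a 100$ balance.
async function simulateRace() {
  const db = new FakeDb([[1, 100]]);
  const results = await Promise.all(Array.from({ length: 5 }, () => withdraw(db, 1, 100)));
  const approved = results.filter((ok) => ok).length;
  return { approved, withdrawn: approved * 100, finalBalance: await db.getBalance(1) };
}

simulateRace().then((r) => console.log(r)); // { approved: 5, withdrawn: 500, finalBalance: 0 }
```

All five withdrawals are approved and 500$ leaves an account that only had 100$, which is exactly the bug described above.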

2. But wait a sec… Why do we need to care about it when Node.js is single-threaded by nature?

- Hmm, so race conditions happen because some backend technologies use multiple threads to process HTTP requests (in terms of API development). So the question is: what about the god Node.js :v, a single-threaded backend runtime? It seems to make sense that we don't need to care about race conditions in Node.js because it's single-threaded, right? Actually, our beloved Node.js can still have race conditions due to its asynchronous nature (the event loop): whenever a request handler awaits something, such as a database query, other requests can run before it resumes. You can read more here: https://www.nodejsdesignpatterns.com/blog/node-js-race-conditions/

- With Node.js we can use a mutex to make multiple async operations take turns on a shared resource. I'll write another blog about mutexes later. But a mutex is not the best solution here, because when we scale our infrastructure, e.g. 2 running instances of the BE server reading the same database, the race condition can still occur across instances: each instance only has its own in-memory mutex.

- So the solution I propose here is using a message queue. A message queue is a mechanism through which services communicate with each other by sending messages through a queue. We can set the concurrency of the queue to 1, meaning only one message is processed at a time. If the queue only lets one message be processed at a time, then even when we run multiple instances of the BE service against the same queue, only one withdrawal is handled at any moment.

- One thing to note: message queues (built on Redis, RabbitMQ, ...) are not just for handling race conditions. By nature they can deliver multiple messages to multiple instances at a time, to communicate between services or to divide complicated tasks among multiple workers, and so on. I'll write more about message queues in another blog.

- So we must configure our queue to process just ONE MESSAGE AT A TIME to avoid race conditions.
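The effect of the concurrency setting can be illustrated without Redis. Below is a minimal sketch, assuming a hypothetical in-process InMemoryQueue class (not bee-queue's API) with a concurrency limit, running the same five 100$ withdrawals against a 100$ balance. It only serializes work inside one Node.js process; a Redis-backed queue is what lets several server instances share the same queue.

```typescript
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

type Pending<T, R> = { data: T; resolve: (result: R) => void; reject: (err: unknown) => void };

// Hypothetical in-process job queue with a concurrency limit (illustration only).
class InMemoryQueue<T, R> {
  private pending: Pending<T, R>[] = [];
  private active = 0;
  private readonly concurrency: number;
  private readonly handler: (data: T) => Promise<R>;

  constructor(concurrency: number, handler: (data: T) => Promise<R>) {
    this.concurrency = concurrency;
    this.handler = handler;
  }

  // Enqueue a job; the returned promise settles with the handler's result.
  add(data: T): Promise<R> {
    return new Promise<R>((resolve, reject) => {
      this.pending.push({ data, resolve, reject });
      this.drain();
    });
  }

  // Start queued jobs until `concurrency` of them are running.
  private drain(): void {
    while (this.active < this.concurrency && this.pending.length > 0) {
      const job = this.pending.shift()!;
      this.active++;
      this.handler(job.data)
        .then(job.resolve, job.reject)
        .finally(() => {
          this.active--;
          this.drain(); // a slot is free: start the next job, if any
        });
    }
  }
}

// Five withdrawals of 100$ from a 100$ balance, processed with the given concurrency.
async function runWithConcurrency(concurrency: number) {
  let balance = 100; // the shared "database row"
  const queue = new InMemoryQueue<number, boolean>(concurrency, async (amount) => {
    await sleep(10); // simulated read latency
    const current = balance;
    if (current < amount) return false;
    await sleep(10); // simulated write latency
    balance = current - amount; // write based on what we read
    return true;
  });
  const results = await Promise.all([100, 100, 100, 100, 100].map((a) => queue.add(a)));
  return { approved: results.filter((ok) => ok).length, finalBalance: balance };
}

runWithConcurrency(1).then((r) => console.log("concurrency 1:", r)); // approved: 1
runWithConcurrency(5).then((r) => console.log("concurrency 5:", r)); // approved: 5
```

With a limit of 1, each job reads the balance only after the previous job has written it, so only the first withdrawal is approved; raising the limit to 5 brings the race back.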

3. OK, let's destroy the race condition with Redis

- Redis is an in-memory key-value database; it's fast and easy to use. We can use it for caching, for storing data and, of course in this blog, as a message queue. Besides Redis we could use RabbitMQ or MQTT, but I like Redis because it's simple. RabbitMQ and its concepts are too complicated just to handle a race condition :)))

- You can install Redis on your local machine or use a hosted Redis in the cloud. For the scope of this blog I won't explain how to install or use Redis; let's just focus on how to use it to avoid race conditions.

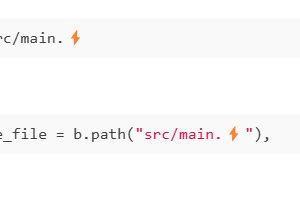

- OK, let's code. First we'll use the bee-queue package, a message queue system for Node.js that uses Redis in the background.

```shell
npm i bee-queue
```

- Just skip the other things and focus on the implementation only. You can read more about bee-queue here: https://github.com/bee-queue/bee-queue

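```typescript
import Queue from "bee-queue";

// Hypothetical placeholders for your real data access and payment code (pseudo code).
declare const database: {
  getBalance(userId: number): Promise<number>;
  updateBalance(userId: number, balance: number): Promise<void>;
};
declare function transferMoney(userId: number, amount: number): Promise<void>;

type WithdrawRequest = { userId: number; amount: number };

const beeQueueConfig = {
  prefix: "handle_race_condition",
  stallInterval: 5000,
  nearTermWindow: 1200000,
  delayedDebounce: 1000,
  redis: {
    host: process.env.REDIS_HOST,
    port: Number(process.env.REDIS_PORT ?? 6379),
    db: 0,
    options: {},
  },
  isWorker: true, // this instance processes jobs
  getEvents: true, // needed to receive the job 'succeeded' / 'failed' events
  sendEvents: true,
  storeJobs: true,
  ensureScripts: true,
  activateDelayedJobs: true,
  removeOnSuccess: false,
  removeOnFailure: false,
  redisScanCount: 100,
  autoConnect: true,
};

// create a queue
const withDrawQueue = new Queue("with-draw-queue", beeQueueConfig);

// The job handler (the business logic is pseudo code).
// It must be defined before process() uses it: a `const` cannot be read before its declaration.
const handleWithDraw = async (job: Queue.Job<WithdrawRequest>) => {
  // the message payload is in job.data
  const { userId, amount } = job.data;
  const balance = await database.getBalance(userId);
  if (balance < amount) {
    // throwing rejects the returned promise, so the job is marked as failed
    throw new Error("Invalid Balance");
  }
  await database.updateBalance(userId, balance - amount);
  await transferMoney(userId, amount);
  return "Success";
};

// tell bee-queue to process the queue with concurrency set to 1
withDrawQueue.process(1, handleWithDraw);

// The API controller (e.g. POST /withdraw) calls this function:
// create a job, wait until it is done, then return the result as JSON via HTTP.
export async function withDrawSingleProcess(body: WithdrawRequest) {
  const createdJob = withDrawQueue.createJob(body);
  // attach the listeners before saving so a fast worker cannot finish first
  const jobPromise = new Promise((resolve, reject) => {
    createdJob.on("succeeded", (result) => resolve(result));
    createdJob.on("failed", (err) => reject(err));
  });
  await createdJob.save();
  return await jobPromise;
}
```

- OK, a quick explanation :v First we need an object to configure bee-queue (Redis host, Redis port, etc.). Then we create a queue and tell it to run a handler function with concurrency 1, which means messages are processed one at a time: withDrawQueue.process(1, handleWithDraw);

- One caveat: in bee-queue, the concurrency passed to process() is the limit for that particular worker. If you run several instances of the BE service and each of them calls process(1, ...), every instance handles one job at a time, so jobs can still run in parallel across instances. To process strictly one message at a time across the whole system, let only one instance run the worker and create the queue with isWorker: false on the others, so they only enqueue jobs.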

- Write the logic for your job as usual. The handler returns a Promise: if the Promise resolves, the job succeeds; if it rejects (for example, because the handler throws), the job fails.

- In the API handler, we add a new message to the queue by calling createJob(), which returns a reference to the created job. We could stop there, but if we want to wait until the task is done before responding to the user (success or not), we can listen to the job's succeeded and failed events inside a Promise, then await that Promise before returning:

```typescript
const createdJob = withDrawQueue.createJob(body);
const jobPromise = new Promise((resolve, reject) => {
  createdJob.on("succeeded", (result) => resolve(result));
  createdJob.on("failed", (err) => reject(err));
});
await createdJob.save();
return await jobPromise;
```

- OK, I won't explain in detail how to use bee-queue or give the full example code, because depending on the BE framework (Express, Hono, Elysia, ...) it can be implemented in various ways. But the core idea is that we set the concurrency to 1 when we start processing the queue. And tada, no need to be scared of race conditions anymore.

- We can test our endpoint with a tool like Apache Bench (ab) by firing hundreds or thousands of requests at it at the same time, then checking whether a race condition occurs. GOOD LUCK.
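If you prefer to stay in TypeScript instead of using Apache Bench, a small script can fire many requests at once and count the outcomes. This is a minimal sketch: fireConcurrently and postWithdraw are hypothetical helpers, the POST /withdraw endpoint, its JSON body shape and http://localhost:3000 are assumptions, and it relies on the global fetch() available in Node.js 18+.

```typescript
// Resolves to an HTTP status code, or 0 if the request itself failed (e.g. connection refused).
type Sender = () => Promise<number>;

// Fire `total` requests at once and count how many ended with each status code.
async function fireConcurrently(total: number, send: Sender): Promise<Map<number, number>> {
  const statuses = await Promise.all(
    Array.from({ length: total }, () => send().catch(() => 0)),
  );
  const tally = new Map<number, number>();
  for (const status of statuses) tally.set(status, (tally.get(status) ?? 0) + 1);
  return tally;
}

// Hypothetical sender for a POST /withdraw endpoint taking { userId, amount } as JSON.
function postWithdraw(url: string, userId: number, amount: number): Sender {
  return async () => {
    const res = await fetch(url, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ userId, amount }),
    });
    await res.text(); // drain the body so the connection can be reused
    return res.status;
  };
}

// Usage (assumes the API runs locally and user 1 has a 100$ balance):
// fireConcurrently(200, postWithdraw("http://localhost:3000/withdraw", 1, 100))
//   .then((tally) => console.log(tally)); // only one request should succeed
```

Counting status codes instead of stopping at the first error makes it easy to spot more than one successful withdrawal; afterwards, also check the balance stored in the database.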

Conclusion

- Developing a BE can be very easy for a simple CRUD application, but always keep in mind that when the BE goes wrong you can lose a lot of money and everything turns upside down :)))). Race conditions are a problem we need to take especially seriously when we work with things like money. Bad people always want to cheat money out of you :v

- My implementation may not be the best, but it works for me. I'm sure someone has a better one. I hope this blog helps you not lose money :v

- Thanks for reading

__Coding cat 2024 with love <3__