Yes, it's hard, but once you master the underlying concepts, things start to make sense. Networking is always a challenge for developers nowadays, because we work with high-level abstractions. That's why, for a developer like me moving into the DevOps world, networking feels really weird :)))

OK, if you are like me, let's explore the k8s networking world from scratch and see how we can use k8s services to deploy a web app in a real-world application.

Table of contents

- Part 1: My first time deploy a web to a aws cloud service

- Part 2: Docker and containerization

- Part 3: K8s and the new world of container orchestration

- Part 4: Deploy your express application to k8s

- Part 5: Networking with K8s is f***ing hard

- Part 6: From the cloud to ground: Physical server setup for a private cloud

- Part 7: From the cloud to ground: Install Ubuntu Server and Microk8s

- Part 8: From the cloud to ground: Harvester HCI for real world projects

- Part 9: From the cloud to ground: Private images registry for our private cloud

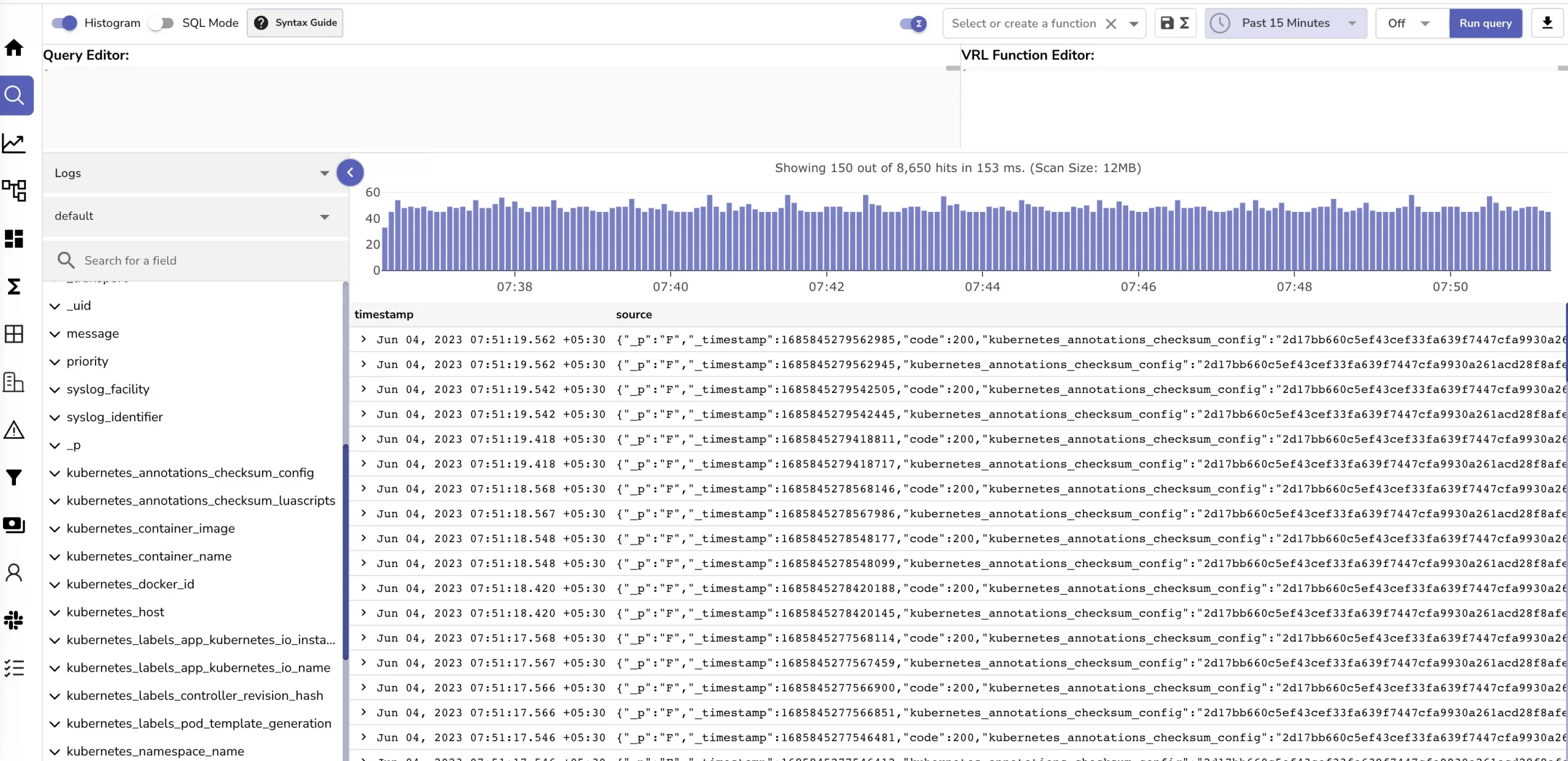

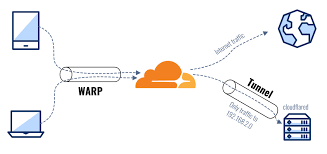

- Part 10: From the cloud to ground: Accept outside world traffics via Cloudflare tunnel

- Part 11: From the cloud to ground: CI/CD with git hub runners

- Part 12: Monitoring and debugging with Open Observe and lens

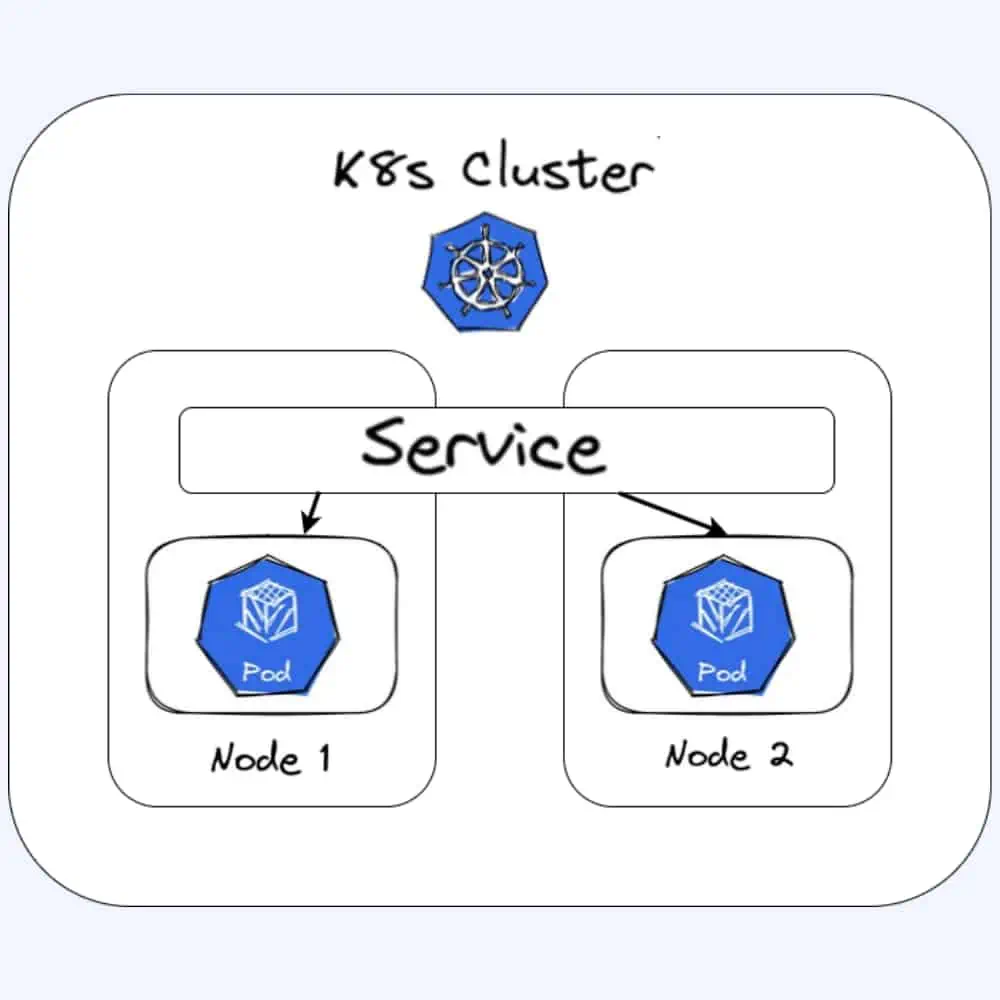

About the k8s network model

The Kubernetes network model specifies:

- Every pod gets its own IP address

- Containers within a pod share the pod IP address and can communicate freely with each other

- Pods can communicate with all other pods in the cluster using pod IP addresses

- Isolation (restricting what each pod can communicate with) is defined using network policies

As a result, pods can be treated much like VMs or hosts (they all have unique IP addresses), and the containers within pods can be treated like processes running within a VM or host (they run in the same network namespace and share an IP address). This model makes it easier for applications to be migrated from VMs and hosts to pods managed by Kubernetes. In addition, because isolation is defined using network policies rather than the structure of the network, the network remains simple to understand.
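To make the isolation point concrete, here is a minimal sketch of a network policy. It assumes the hello-express app from the previous part (pods labeled app: hello-express, listening on port 3000) and a hypothetical role: frontend label on the client pods; the policy name is made up too. Whether the policy is actually enforced depends on the network plugin your cluster runs.

```yaml
# Sketch: only pods labeled role=frontend (same namespace) may reach hello-express on TCP 3000.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: hello-express-allow-frontend   # hypothetical name
  namespace: hello-express
spec:
  # The pods this policy protects
  podSelector:
    matchLabels:
      app: hello-express
  policyTypes:
    - Ingress
  ingress:
    - from:
        # Allowed clients; the role=frontend label is an assumption for this example
        - podSelector:
            matchLabels:
              role: frontend
      ports:
        - protocol: TCP
          port: 3000
```

Once a pod is selected by a policy like this, incoming traffic that no rule allows is dropped, while pods not selected by any policy stay reachable from everywhere in the cluster.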

The k8s services

To make an application in k8s reachable, inside the cluster or from the internet, we use services. This post covers 3 types of services.

The ClusterIP

ClusterIP is the default service type in Kubernetes, and it provides internal connectivity between the different components of our application. When the service is created, Kubernetes assigns it a virtual IP address that can only be reached from within the cluster. This IP address is stable and doesn't change even if the pods behind the service are rescheduled or replaced. ClusterIP services are an excellent choice for communication between components of our application that don't need to be exposed to the outside world. Here is the service file for the hello-express example from the last blog, using ClusterIP:

    apiVersion: v1
    kind: Service
    metadata:
      name: hello-express
      namespace: hello-express
    spec:
      selector:
        app: hello-express
      type: ClusterIP
      ports:
        - protocol: TCP
          port: 3000
          targetPort: 3000

The NodePort

NodePort services extend the functionality of ClusterIP services by enabling external connectivity to our application. When we create a NodePort service, Kubernetes opens a designated port on every node in the cluster and forwards traffic arriving on that port to the service. These services suit applications that need to be accessible from outside the cluster, such as web applications or APIs. By default, Kubernetes assigns the port number from the range 30000-32767. We can also choose a port from that range ourselves by adding the nodePort field to the service definition. Here is the service file for the hello-express example from the last blog, using NodePort:

    apiVersion: v1
    kind: Service
    metadata:
      name: hello-express
      namespace: hello-express
    spec:
      selector:
        app: hello-express
      type: NodePort
      ports:
        - protocol: TCP
          port: 3000
          targetPort: 3000
          nodePort: 30300

The nodePort field is set to 30300, which tells Kubernetes to expose the service on port 30300 on every node in the cluster.
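The three port fields are easy to mix up when they all carry similar numbers. As a small sketch, here is a variant where each one is different. The service name hello-express-nodeport and the choice of port 80 are assumptions for illustration only, not part of the original example.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: hello-express-nodeport   # hypothetical name
  namespace: hello-express
spec:
  type: NodePort
  selector:
    app: hello-express
  ports:
    - protocol: TCP
      port: 80          # port of the service on its cluster IP (used inside the cluster)
      targetPort: 3000  # port the hello-express container listens on
      nodePort: 30300   # port opened on every node (must be in the node port range)
```

With this variant, pods inside the cluster would call the service on port 80, while clients outside the cluster would use a node's IP with port 30300. Both paths end up on port 3000 of a hello-express pod.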

The LoadBalancer

LoadBalancer services connect our applications externally, and production environments use them where high availability and scalability are critical. When we create a LoadBalancer service, Kubernetes provisions a load balancer in our cloud environment and forwards the traffic to the nodes running the service. LoadBalancer services are ideal for applications that need to handle high traffic volumes, such as web applications or APIs. With LoadBalancer services, we can access our application using a single IP address assigned to the load balancer.

After creating the LoadBalancer service, Kubernetes provisions a load balancer in the cloud environment with a public IP address. We can use this IP address to access our application from outside the cluster.

Here is the hello-express example from the last blog, using a LoadBalancer service:

apiVersion: v1

kind: Service

metadata:

name: hello-express

namespace: hello-express

spec:

selector:

app: hello-express

type: LoadBalancer

ports:

- protocol: TCP

port: 3000

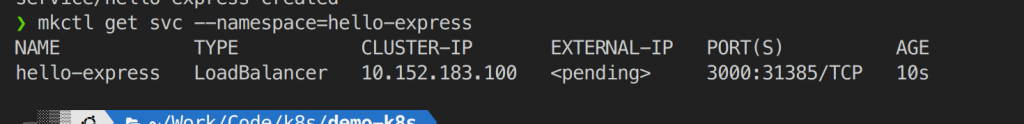

targetPort: 3000Note: Loadbalancer services is installed by default on some k8s on the cloud services for examples: amazon web service, google cloud platform,… But in our local microk8s no LoadBalancer services installed yet that why when you create the service the loadbalancer ip is pending forever

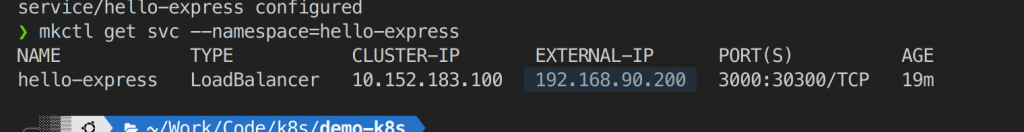

To configure a load balancer we need two things: an IP pool (a range of free IP addresses on your network from which k8s can pick one to use as the load balancer IP) and a load balancer implementation (which decides which pod should receive the traffic at any given time); HAProxy or Nginx can play that role. With our beloved MicroK8s we have an addon called MetalLB. You can follow these docs to set up a load balancer and then expose your application with a LoadBalancer service: https://microk8s.io/docs/addon-metallb. When you install MetalLB, it asks you to specify the IP pool based on your network configuration. Choose a range that fits your network; for example, on my local network I chose 192.168.90.200-192.168.90.205. Then apply the LoadBalancer service file and you will see the load balancer IP that k8s assigns to your service.

Try to visit this IP on port 3000 and you will see our old hello-express app running. Nice, right? :v
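If you ever need to manage the IP pool by hand instead of through the addon prompt, a pool like mine could be sketched as below. This assumes a MetalLB version that is configured through custom resources and installed in the metallb-system namespace; the names local-pool and local-l2 are made up for the example. Check the MetalLB docs for your version before applying anything like this.

```yaml
# Sketch: the address range MetalLB may hand out as load balancer IPs
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: local-pool              # hypothetical name
  namespace: metallb-system     # assumed install namespace
spec:
  addresses:
    - 192.168.90.200-192.168.90.205
---
# Sketch: announce addresses from that pool on the local network (layer 2 mode)
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: local-l2                # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - local-pool
```

Keep the range outside of what your router's DHCP server hands out, otherwise another device may end up with the same IP.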

A LoadBalancer service can also be combined with ingress to build a full ingress plus load balancer setup. So what is ingress? Let's discover it now:

Ingress

Strictly speaking, ingress is not a k8s service type. It is an add-on you install into k8s. It comes with a workload called the ingress controller that lives inside the k8s cluster (which means it can reach every internal cluster IP of this cluster). The ingress controller itself is exposed to the outside, for example via a NodePort service or host ports. So you can see that ingress works like a bridge between the outside network and your k8s internal network.

Ingress maps a domain name to a service in k8s through its ClusterIP service (it forwards the traffic to the designated cluster IP). It works like an nginx reverse proxy (in fact, the ingress controller MicroK8s installs is built on the nginx web server). Remember Part 1: My first time deploy a web to AWS cloud service? There I set up an nginx web server as a reverse proxy to multiple applications on one server based on the domain name, e.g. api.example.com to localhost:3000 and fe.example.com to localhost:3001. Ingress works the same way: based on the domain, it proxies to the internal cluster IP of a specific service. If we don't use a domain, we can map by the host machine's IP, port and path instead; in our example that will be localhost. For example, I will map http://localhost to our hello-express service. In a real-world application we should expose it via a domain.
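The nginx setup from Part 1 could be sketched as a single ingress with two host rules. The backend service names api and fe, their ports, and the ingress name are assumptions for illustration; both services would have to be ClusterIP services living in the same namespace as the ingress. The ingress class public is the one used with the MicroK8s ingress addon in this post.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-hosts            # hypothetical name
  namespace: hello-express
  annotations:
    kubernetes.io/ingress.class: public
spec:
  rules:
    # api.example.com -> service "api" (assumed), port 3000
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api
                port:
                  number: 3000
    # fe.example.com -> service "fe" (assumed), port 3001
    - host: fe.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: fe
                port:
                  number: 3001
```

Each rule plays the role of one nginx server block: the host is matched first, then the path, and the request is forwarded to the named service.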

First, enable ingress in MicroK8s by typing: microk8s enable ingress. Create a file named ingress.yaml and copy the code below into it. Make sure your service is a ClusterIP service.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-express
      namespace: hello-express
      annotations:
        kubernetes.io/ingress.class: public
    spec:
      rules:
        - http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: hello-express
                    port:
                      number: 3000

Now run kubectl apply -f ingress.yaml and visit http://localhost; you will see the hello-express app from the last blog. If you need to expose it via a domain, here is how you can do it:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-express
      namespace: hello-express
      annotations:
        kubernetes.io/ingress.class: public
    spec:
      rules:
        - host: "your-domain"
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: hello-express
                    port:
                      number: 3000

Then point your domain to the IP of your k8s node and you can see your app via the domain (we cannot demo it now because we are on localhost, but you can try it by setting up an AWS Lightsail Ubuntu server, installing MicroK8s, then purchasing a domain and pointing it to your Lightsail instance's IPv4 address).

CONCLUSION

OK, I hope at this point k8s networking is not too dark for you guys anymore. Just remember some keywords:

- ClusterIP is the default service type. It is internal to the k8s cluster and accessible from inside the cluster only.

- NodePort exposes the service on the host IP with a specific port.

- LoadBalancer is best for production but needs more setup, and you need to allocate an IP pool and manage it well (so that nothing else on the network uses the IPs in the pool).

- Ingress is the best fit for exposing a web app. It works like a bridge between the internet and the k8s internal network, and we can expose our service via a URL just like with an nginx reverse proxy.

Most of the time I use ingress with ClusterIP to expose my services, because my job is usually to deploy web applications.

So in short, here are some use cases for each of them:

- ClusterIP for internal communication inside the k8s cluster only

- NodePort for exposing non-HTTP services, because it is simple and we don't care about a domain

- LoadBalancer is a good solution for exposing an HTTP web app, but managing the IP addresses gets complicated when you need to deploy a lot of web apps

- Ingress is the best solution for exposing a web app. It supports domain-based reverse proxying, and you don't need to care about public IPs (just point the domain to the k8s node IP)

Wow, another long blog with a lot of knowledge. K8s is hard, right? But it is very powerful and helps us a lot. Once you understand all the underlying concepts, k8s will not confuse you anymore. Good luck with your yaml files <3

This is the last blog in the series where we talk about theory. From the next part on, it is all about my experiences and stories from installing a local cloud for myself and for other companies (what I call from the cloud to ground). So no more boring concepts, just yaml files :))))). Let's go to Part 6: From the cloud to ground: Physical server setup for a private cloud