Moving on to the next part of the DevOps series “From cloud to ground”, we now discover a brand new tool called Docker. The first time I read about Docker, it seemed very complicated, with a lot of concepts and tools that come along with it. For the sake of simplicity, I will just cover some basic concepts and steps so you can start with Docker right away. For more advanced concepts I'll leave a name with a link so you can read more, or I will write a series about Docker later :v

Table of contents

- Part 1: My first time deploying a web app to an AWS cloud service

- Part 2: Docker and containerization

- Part 3: K8s and the new world of container orchestration

- Part 4: Deploy your express application to k8s

- Part 5: Networking with K8s is f***ing hard

- Part 6: From the cloud to ground: Physical server setup for a private cloud

- Part 7: From the cloud to ground: Install Ubuntu Server and Microk8s

- Part 8: From the cloud to ground: Harvester HCI for real world projects

- Part 9: From the cloud to ground: Private images registry for our private cloud

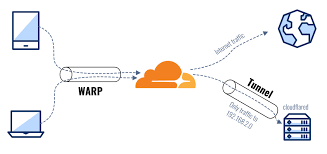

- Part 10: From the cloud to ground: Accept outside world traffics via Cloudflare tunnel

- Part 11: From the cloud to ground: CI/CD with git hub runners

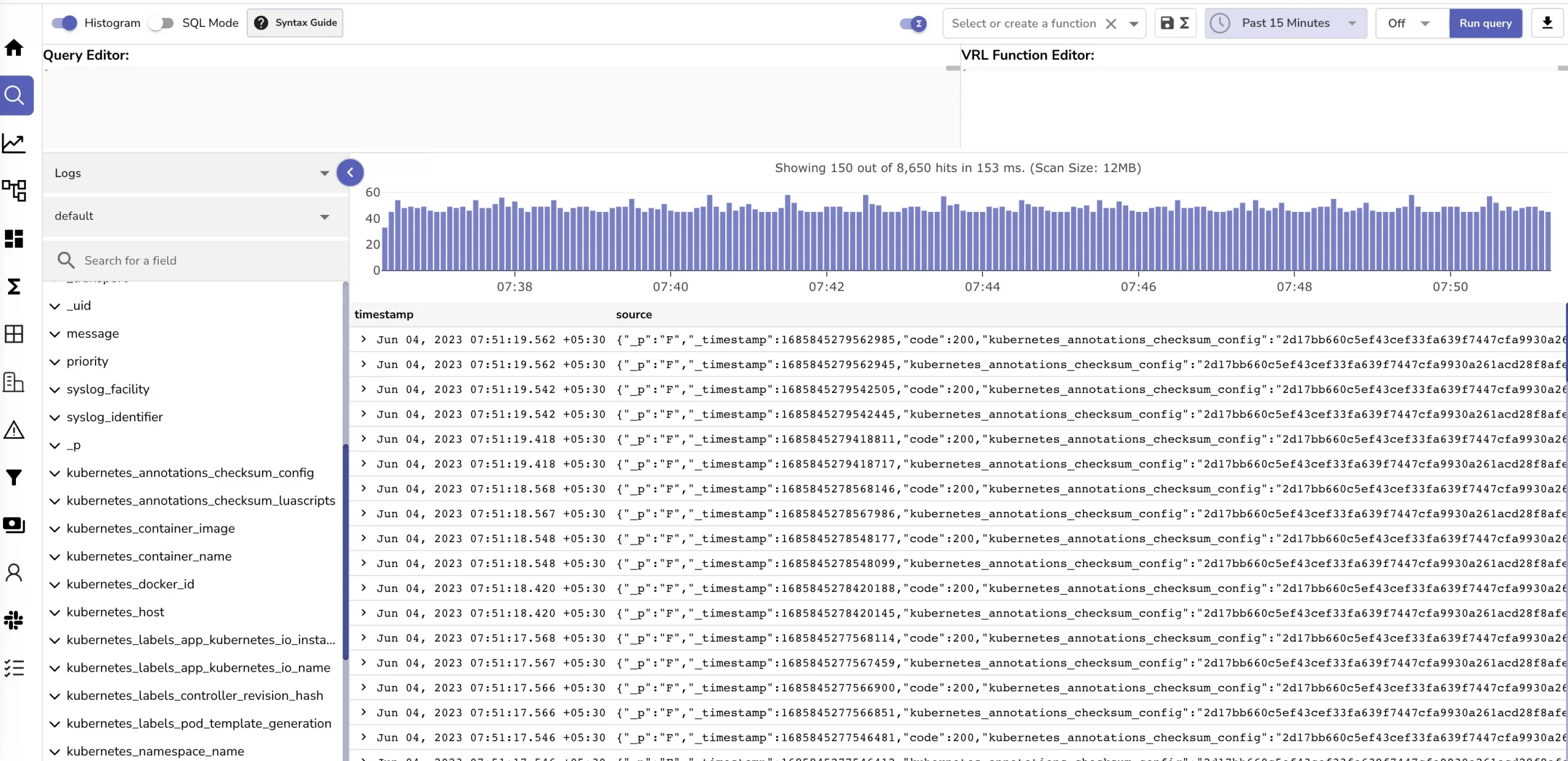

- Part 12: Monitoring and debugging with Open Observe and lens

1. The “it works on my machine” problem

As a developer, I'm sure you've heard or used this sentence: “But it works on my machine!”. Yes, it works on yours but not on mine :)) So why? Because of the environment, of course. Each machine has its own OS and its own installed development environment, e.g. Node.js, Java, libraries or a database,… so the same source code can work on one machine but not on all of them. Containerization comes to solve this problem.

Imagine you put everything you need to run an application into a bag, including the OS libraries, the source code and the environment (Node.js, database, networking…), then give this bag to anyone so they can run exactly the same thing as you. That is how containerization works. In other words, containerization is a technology that packages software code along with all its dependencies into a single container. This ensures that the application runs reliably and consistently across different computing environments. By isolating applications into separate containers, developers can streamline deployment, scaling and management tasks, making the process more efficient and reducing compatibility issues across platforms.

2. Docker basic concepts

Docker is a container runtime. You can think of it as Docker's own implementation of containerization. There are also other container runtimes, like containerd, CRI-O, Podman,… but I use Docker, so let's get started with Docker.

First, some concepts and notes. Docker and other container runtimes have 2 main concepts:

- Image: a blueprint for creating containers. It is usually made of many layers, and each layer comes from an instruction that tells Docker how to build the image, for example:

```dockerfile
FROM node:17-alpine

RUN npm install -g nodemon

# Change directory
WORKDIR /app

# cache node_modules
COPY package.json .

# run command to install packages
RUN npm install

# copy my source code
COPY . .

# document the port the app listens on (optional; it does not publish the port)
EXPOSE 4000

# this command will be executed when the container starts
CMD ["npm", "run", "dev"]
```

This is how we can define a Docker image. Each instruction adds a layer (strictly speaking, only RUN, COPY and ADD add filesystem layers; the other instructions only change the image's metadata). I won't go into the syntax here; you can read more at: https://docs.docker.com/. But you can see that first we need a starting point for our image (the base image), which in this example is node:17-alpine (a lightweight Alpine Linux with Node.js 17 installed). Then we run some commands to install nodemon, install packages and copy the source code, and finally start the application via npm run dev. So however you set up your local development environment, you can describe the same steps in an image like this.

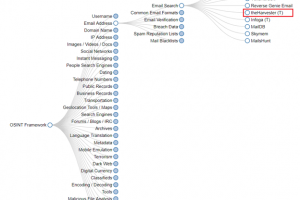
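The # cache node_modules comment in the Dockerfile above is about Docker's build cache: when nothing a step depends on has changed, Docker reuses the layer from the previous build, and once one step changes, every step after it is rebuilt. Below is a simplified JavaScript model of that idea (my own toy, not how Docker actually computes its cache keys; the file names and contents are hypothetical). It shows why package.json is copied before the rest of the source: editing only the source code still reuses the slow RUN npm install layer.

```javascript
const { createHash } = require('node:crypto');

// Toy model of Docker's build cache (a simplification, not Docker's real algorithm):
// a step's cache key depends on the previous step's key, the instruction text and,
// for COPY steps, the contents of the copied files.
function cacheKeys(steps, files) {
  const keys = [];
  let parentKey = '';
  for (const step of steps) {
    const hash = createHash('sha256').update(parentKey).update(step.instruction);
    // Hash copied files in a stable (sorted) order so the key is deterministic.
    for (const name of [...(step.copies ?? [])].sort()) {
      hash.update(name).update(files[name] ?? '');
    }
    parentKey = hash.digest('hex');
    keys.push(parentKey);
  }
  return keys;
}

// The Dockerfile above, as a list of steps (main.js is a hypothetical source file).
const steps = [
  { instruction: 'FROM node:17-alpine' },
  { instruction: 'RUN npm install -g nodemon' },
  { instruction: 'WORKDIR /app' },
  { instruction: 'COPY package.json .', copies: ['package.json'] },
  { instruction: 'RUN npm install' },
  { instruction: 'COPY . .', copies: ['package.json', 'main.js'] },
  { instruction: 'EXPOSE 4000' },
  { instruction: 'CMD ["npm", "run", "dev"]' },
];

// Hypothetical file contents: only main.js changes between the two builds.
const firstBuild = {
  'package.json': '{"dependencies":{"express":"^4.18.2"}}',
  'main.js': "res.send('Hello World!')",
};
const secondBuild = { ...firstBuild, 'main.js': "res.send('Hello Docker!')" };

const before = cacheKeys(steps, firstBuild);
const after = cacheKeys(steps, secondBuild);
steps.forEach((step, i) => {
  console.log(`${before[i] === after[i] ? 'CACHED ' : 'REBUILD'} ${step.instruction}`);
});
```

Running it with node prints CACHED for the first five steps (up to and including RUN npm install) and REBUILD for the last three. If package.json changed instead, the chain would break at COPY package.json . and npm install would run again, which is exactly when reinstalling packages is needed.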

We can create our own custom image or use images developed by others from an image registry (much like using other people's code from GitHub). There are 2 types of image registries: public and private. On a public registry like Docker Hub, you can find and use popular images, e.g. Ubuntu, node, java,… You can also upload/share your own image with the docker push command.
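When you write FROM node:17-alpine or pull ubuntu, Docker expands the short name into a full reference: registry host, repository and tag. The sketch below is a simplified JavaScript version of those naming rules (it ignores digests such as @sha256:… and other edge cases); codingcat/hello-express and localhost:5000 are hypothetical examples of a Docker Hub user image and a private registry.

```javascript
// Simplified sketch of how Docker expands a short image name into
// registry/repository:tag (digests like @sha256:... are not handled).
function normalizeImageRef(ref) {
  let registry = 'docker.io'; // Docker Hub is the default registry
  let rest = ref;
  const slash = rest.indexOf('/');
  if (slash !== -1) {
    const first = rest.slice(0, slash);
    // The first part is a registry host only if it looks like one.
    if (first.includes('.') || first.includes(':') || first === 'localhost') {
      registry = first;
      rest = rest.slice(slash + 1);
    }
  }
  // Official Docker Hub images live under the "library/" namespace.
  if (registry === 'docker.io' && !rest.includes('/')) {
    rest = `library/${rest}`;
  }
  // The tag follows ':' in the last path segment; the default tag is "latest".
  const colon = rest.indexOf(':', rest.lastIndexOf('/') + 1);
  const repository = colon === -1 ? rest : rest.slice(0, colon);
  const tag = colon === -1 ? 'latest' : rest.slice(colon + 1);
  return `${registry}/${repository}:${tag}`;
}

// codingcat/... and localhost:5000/... are hypothetical examples.
const examples = ['node:17-alpine', 'ubuntu', 'codingcat/hello-express:1.0', 'localhost:5000/hello-express'];
for (const ref of examples) {
  console.log(`${ref} -> ${normalizeImageRef(ref)}`);
}
```

This is also why pushing to a private registry requires the image name to start with the registry host (for example after docker tag hello-express localhost:5000/hello-express); a name without a host is pushed to Docker Hub.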

- Container: a running instance of an image (a process). When an image is built successfully, it is saved on your local machine. When you want to run it, Docker creates a running container from that image. The container is isolated from your host machine: it has its own separate network, file system (including the OS files from the image), processes,… (you can think of it as a lightweight VM running on your machine, although unlike a VM it shares the host's kernel)

3. Install docker on Ubuntu

- Docker now also has Docker Desktop, a GUI for working with Docker, which you can try

- To install on a Linux machine, just go to https://docs.docker.com/engine/install/ubuntu/ (you can use the “Install using the convenience script” option)

- Check that the installation succeeded:

```shell
docker version
```

4. Build and run your first image

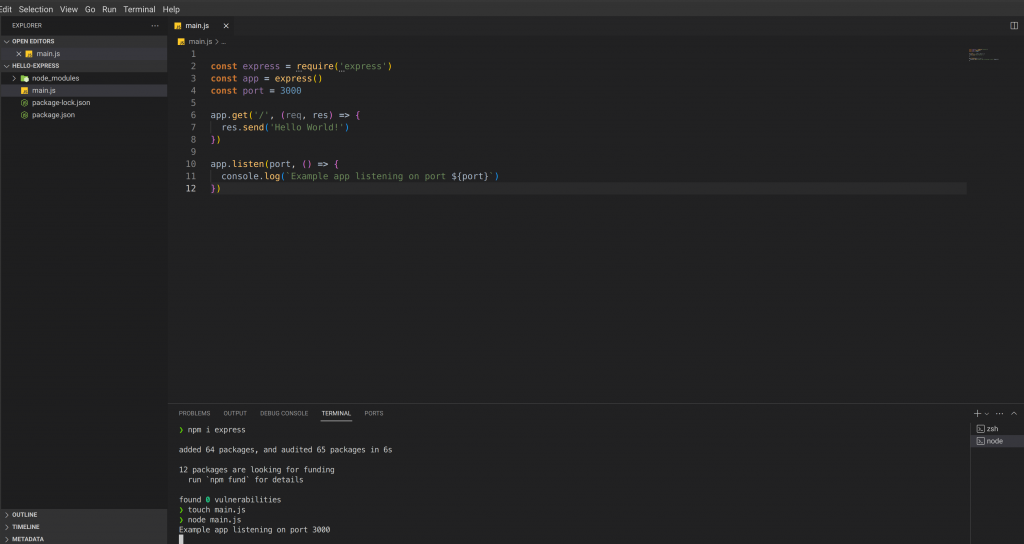

- Let's create a Docker image for a simple “Hello World” Node.js Express web server

- First create a folder named hello-express, then cd hello-express, then run npm init -y

- Then run npm i express, create a file named main.js and paste this code:

```javascript
const express = require('express')
const app = express()
const port = 3000

app.get('/', (req, res) => {
  res.send('Hello World!')
})

app.listen(port, () => {
  console.log(`Example app listening on port ${port}`)
})
```

- Now if you run node main.js, you will get a server running at localhost:3000

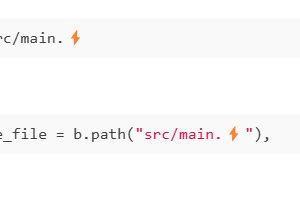

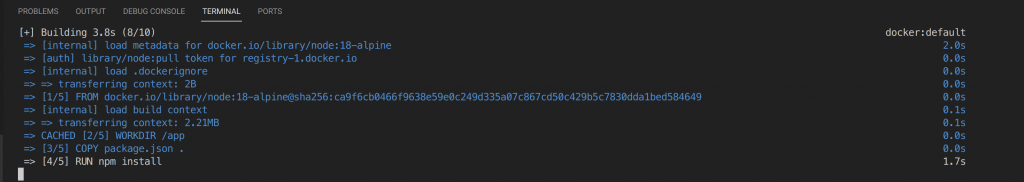

- To create a Docker image, we create a file named Dockerfile in the project folder and start defining the layers of our image:

```dockerfile
FROM node:18-alpine

# Create app directory
WORKDIR /app

# Install app dependencies
COPY package.json .
RUN npm install

# Bundle app source
COPY . .

EXPOSE 3000

# Run the app
CMD [ "node", "main.js" ]
```

- To build our image, type this in a terminal:

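```shell
sudo docker build -t hello-express .
```

This tells Docker to build an image named hello-express, using the current folder (the .) as the build context; by default Docker looks for a file named Dockerfile there. Then start a container from the new image with sudo docker run hello-express.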

- But at this point, if you open localhost:3000, nothing shows up. Why? As I mentioned before, everything in a container is isolated from your local machine, even the network. Your Express app is listening inside the container's own network, and that port is not published on your host, so you cannot reach it from the host machine. We need one more step to do that, but first let's stop the container we just started.

- To stop the container, type docker stop followed by the container ID or name, e.g. sudo docker stop vigilant_hermann. This name was assigned automatically by Docker (sudo docker ps lists running containers with their IDs and names); you can name a container yourself by adding --name=hello-express (or any name) to the docker run command.

- Port mapping: to map a container port to a host machine port, we use the -p flag: -p host_port:container_port. Options must come before the image name (anything after the image name is passed to the container as its command), so run sudo docker run -p 3000:3000 hello-express. Now if you open localhost:3000 on your machine, you can see the hello message from Express.

- As I said before, even the file system is isolated. So if your Express app has an uploads/ folder to save user-uploaded files, those files live inside the container, not on your host machine, and they are gone once the container is removed. How can we solve this? With volume mapping, which maps a folder in the container to a folder on the host machine: sudo docker run -p 3000:3000 -v /home/upload:/app/uploads hello-express

- There are a lot more docker run options, and if you had to type this f***ing long command every time you run a container, it would make you sick. So let's see how we can deal with this problem.
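Both -p and -v read the host side first and the container side second. The sketch below is a toy JavaScript model of those two mappings (not Docker's real option parser; it only handles the plain host:container form with POSIX paths, and 8080:3000 and /home/upload:/app/uploads are example values). hostPathFor answers the question from the uploads example: where does a file written inside the container end up on the host?

```javascript
const path = require('node:path');

// Toy model of the `host:container` form used by both -p and -v
// (real Docker accepts more forms, e.g. ip:host:container or a :ro suffix).
function parseMapping(spec) {
  const i = spec.indexOf(':');
  if (i <= 0 || i === spec.length - 1) {
    throw new Error(`expected host:container, got "${spec}"`);
  }
  return { host: spec.slice(0, i), container: spec.slice(i + 1) };
}

// Given -v specs, return the host path where a file written inside the container
// is stored, or null if no mapping covers it (the file then stays inside the
// container and is lost once the container is removed).
function hostPathFor(volumeSpecs, containerFile) {
  for (const spec of volumeSpecs) {
    const { host, container } = parseMapping(spec);
    const rel = path.posix.relative(container, containerFile);
    const insideMount = rel !== '..' && !rel.startsWith('../');
    if (insideMount) return path.posix.join(host, rel);
  }
  return null;
}

const port = parseMapping('8080:3000');
console.log(`host localhost:${port.host} -> container port ${port.container}`);

const volumes = ['/home/upload:/app/uploads'];
console.log(hostPathFor(volumes, '/app/uploads/avatar.png')); // /home/upload/avatar.png
console.log(hostPathFor(volumes, '/app/main.js')); // null
```

Using 8080:3000 instead of 3000:3000 makes the direction obvious: the browser on the host talks to port 8080, and Docker forwards it to port 3000 where Express listens inside the container.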

5. Docker Compose

- Docker Compose is a tool that reads a configuration file defining all the services, mappings,… you need to run your application, so you don't have to type them on the command line every time. Create a file named docker-compose.yaml and paste this:

```yaml
# docker-compose.yaml
version: '3.8' # optional: recent Docker Compose versions ignore it and warn that it is obsolete

services:
  api:
    build: .
    container_name: hello-express
    ports:
      - '3000:3000'
    volumes:
      - ./upload:/app/upload
```

- Here we tell Docker to build an image from the same folder, name the container hello-express and, when it runs, map port 3000 and the upload volume.

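- Run this command to start the container with your config:

```shell
docker compose up
```

Now everything is up and running as you wish (add -d to run it in the background, and use docker compose down to stop and remove the container).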
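Under the hood, a service in the Compose file corresponds roughly to one long docker run command. The sketch below is plain JavaScript for illustration only (the image name hello-express and the folder /home/me/hello-express are assumptions, since build: . lets Compose choose the image name). It builds that command from the same settings, which shows how much typing Compose saves.

```javascript
const path = require('node:path');

// Sketch: rebuild the `docker run` command that a Compose service roughly
// corresponds to. Only the keys used in this post are handled, and `image` is an
// assumption standing in for the image that `build: .` produces.
function toDockerRunArgs(service, projectDir) {
  const args = ['run'];
  if (service.container_name) args.push('--name', service.container_name);
  for (const p of service.ports ?? []) args.push('-p', p);
  for (const v of service.volumes ?? []) {
    const i = v.indexOf(':');
    // Compose resolves relative host paths against the project folder.
    const hostPath = path.posix.resolve(projectDir, v.slice(0, i));
    args.push('-v', `${hostPath}:${v.slice(i + 1)}`);
  }
  // Every option goes before the image name; anything after it would be
  // handed to the container as its command instead.
  args.push(service.image);
  return args;
}

// The api service from docker-compose.yaml, written as a JS object.
const api = {
  image: 'hello-express',
  container_name: 'hello-express',
  ports: ['3000:3000'],
  volumes: ['./upload:/app/upload'],
};

// '/home/me/hello-express' is a hypothetical project folder.
console.log(['docker', ...toDockerRunArgs(api, '/home/me/hello-express')].join(' '));
// docker run --name hello-express -p 3000:3000 -v /home/me/hello-express/upload:/app/upload hello-express
```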

6. Some extra concepts

- Docker has a lot of other concepts that I can't cover in this blog, for example: how layers are cached so that builds get faster after the first one, how container processes map to host machine processes, the Docker daemon, the Docker REST API and the Docker CLI,… I highly recommend reading more in the official Docker docs: https://docs.docker.com/

In conclusion, Docker is a tool you should use to make sure your app can be deployed on any machine. It may be hard at first glance, but once you get used to its concepts, things start to make sense. One note you should always keep in mind: “A container is a machine isolated from your host machine”. It runs on your machine, but it does not share anything with it unless you tell it to (like we did with ports and volumes). This is important because when we move to K8s, this mindset will help us a lot.

Containerization is a basic concept that every DevOps engineer must know, but it is not enough for real-world applications: you may need to scale the instances of your application so it can handle more requests or reduce downtime. This is solved by container orchestration. Let's see what it is in the next part of this series: Part 3: K8s and the new world of container orchestration. Thanks for reading and see you soon!

__Coding Cat__