Say you're building an application that lets users post pictures of beautiful girls. Sounds good, right? The first question that probably comes to mind is: "Where should I save all the image files?" There are a lot of options to choose from: saving files directly on the backend server, Firebase Storage, AWS S3 buckets… Today, let's talk about MinIO, an object storage server that stores files as objects inside buckets, like AWS S3, but it's free and open source :D. I'm poor, so all my solutions are free :vvvv

1. What is MinIO?

- MinIO is a high-performance, S3-compatible object storage server. It is lightweight enough to run on a single machine, which makes it a good fit for developers who want S3-style file storage without depending on AWS. I'll write another blog post to guide you through installing your own MinIO server. For now, let's use the public playground server: play.min.io

- One thing to keep in mind: every file in MinIO is treated as an object, and objects are stored in buckets. (You can think of them as files and folders.)

2. MinIO Node.js SDK

First, go to play.min.io (or to your own MinIO server) and create a bucket, then get the access key and secret key from the MinIO admin dashboard.
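Keys from your own server are best kept out of the source code. As a minimal sketch, assuming hypothetical environment variable names (MINIO_ENDPOINT, MINIO_PORT, MINIO_USE_SSL, MINIO_ACCESS_KEY, MINIO_SECRET_KEY) that are not defined by MinIO itself, the options for the client could be built like this:

```javascript
// Build the options object for `new Minio.Client(...)` from environment variables.
// The variable names below are hypothetical; pick whatever fits your project.
function minioOptionsFromEnv(env) {
  const required = ['MINIO_ENDPOINT', 'MINIO_ACCESS_KEY', 'MINIO_SECRET_KEY'];
  for (const name of required) {
    if (!env[name]) {
      throw new Error(`Missing environment variable: ${name}`);
    }
  }
  return {
    endPoint: env.MINIO_ENDPOINT,
    // Environment variables are strings, but the client expects a numeric port.
    port: Number(env.MINIO_PORT || 9000),
    useSSL: env.MINIO_USE_SSL === 'true',
    accessKey: env.MINIO_ACCESS_KEY,
    secretKey: env.MINIO_SECRET_KEY,
  };
}
```

Once the minio package is installed, the result would be passed as `new Minio.Client(minioOptionsFromEnv(process.env))`.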

https://www.npmjs.com/package/minio

npm install minio

Create a MinIO client:

    var Minio = require('minio')

    // Credentials for the public play.min.io playground server.
    var minioClient = new Minio.Client({
      endPoint: 'play.min.io',
      port: 9000,
      useSSL: true,
      accessKey: 'Q3AM3UQ867SPQQA43P2F',
      secretKey: 'zuf+tfteSlswRu7BJ86wekitnifILbZam1KYY3TG'
    });

Then you can use the client to upload a file to MinIO with the fPutObject function:

    // Put a file in bucket my-bucketname with content-type detected automatically.
    // In this case it is `text/plain`.
    var file = 'my-testfile.txt'
    minioClient.fPutObject('my-bucketname', 'my-objectname', file, function (e) {
      if (e) {
        return console.log(e)
      }
      console.log('Success')
    })

Or download the file from the MinIO server to your local machine with the fGetObject function:

    minioClient.fGetObject('my-bucketname', 'my-objectname', '/tmp/objfile', function (e) {
      if (e) {
        return console.log(e)
      }
      console.log('done')
    })

This approach requires the file to pass through the backend server first. If you want users to upload files directly to MinIO, you can use a presigned URL. The flow is: the backend creates a presigned URL for an object. Think of this step as making an empty bag and handing it to the user. The user then puts the file in the bag by calling the URL with the PUT method, with the binary file as the request body.
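On the user's side, the "put the file in the bag" step is a plain HTTP PUT. Here is a minimal sketch, assuming the presigned URL has already been handed over by your backend; the function name uploadToPresignedUrl and the injectable fetchImpl parameter are made up for this example:

```javascript
// Upload a file body to a presigned PUT URL.
// `fetchImpl` defaults to the global fetch (browsers, Node.js 18+).
async function uploadToPresignedUrl(presignedUrl, body, contentType, fetchImpl = fetch) {
  const response = await fetchImpl(presignedUrl, {
    method: 'PUT',
    headers: { 'Content-Type': contentType },
    body: body,
  });
  // Any non-2xx status means the object was not stored.
  if (!response.ok) {
    throw new Error(`Upload failed with status ${response.status}`);
  }
  return response.status;
}
```

In a browser, `body` would typically be the File object taken from an `<input type="file">` element.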

    // 1000 is the expiry time of the URL, in seconds.
    minioClient.presignedPutObject('my-bucketname', 'my-objectname', 1000, function (e, presignedUrl) {
      if (e) return console.log(e)
      console.log(presignedUrl)
    })

Then, to read the file back, we can use presignedGetObject to get a preview URL for the object:

    minioClient.presignedGetObject('my-bucketname', 'my-objectname', 1000, function (e, presignedUrl) {
      if (e) return console.log(e)
      console.log(presignedUrl)
    })

OK, at this point we can design an upload flow for our app like this. The user calls an API with the object name in the body to get a presigned URL, then uploads the file to the URL returned by that API. The user can then call another API, passing the object name, to get a preview URL, and we save that preview URL in our database as the image URL. It makes sense, but there is one thing to notice: A PRESIGNED URL IS VALID FOR AT MOST 7 DAYS. So we cannot save the presigned preview URL in the database, because it will expire.

==> A workaround is to save only the object name in the database. Each time a user fetches data from the API, we take the object name from the database, call presignedGetObject() to get a fresh presigned URL, and return it to the user. That works, but it's not great.
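This workaround can be sketched roughly as follows. It assumes an SDK version where presignedGetObject returns a promise when no callback is passed, and that each database row keeps the object name in a field called objectName; that field name and the function name withPreviewUrls are made up for this example:

```javascript
// Add a fresh presigned preview URL to every row read from the database.
// `client` is expected to be a MinIO client; `rows` come from your own database.
async function withPreviewUrls(client, bucketName, rows, expirySeconds = 60 * 60) {
  return Promise.all(
    rows.map(async (row) => {
      // A new URL is signed on every request, so it never reaches the user expired.
      const imageUrl = await client.presignedGetObject(bucketName, row.objectName, expirySeconds);
      return { ...row, imageUrl };
    })
  );
}
```

The cost is one signing call per row on every read, which is the reason this approach works but is not great.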

3. MinIO bucket policy

- To solve this problem, we can make the objects in the bucket public so anyone can view them without going through a presigned URL.

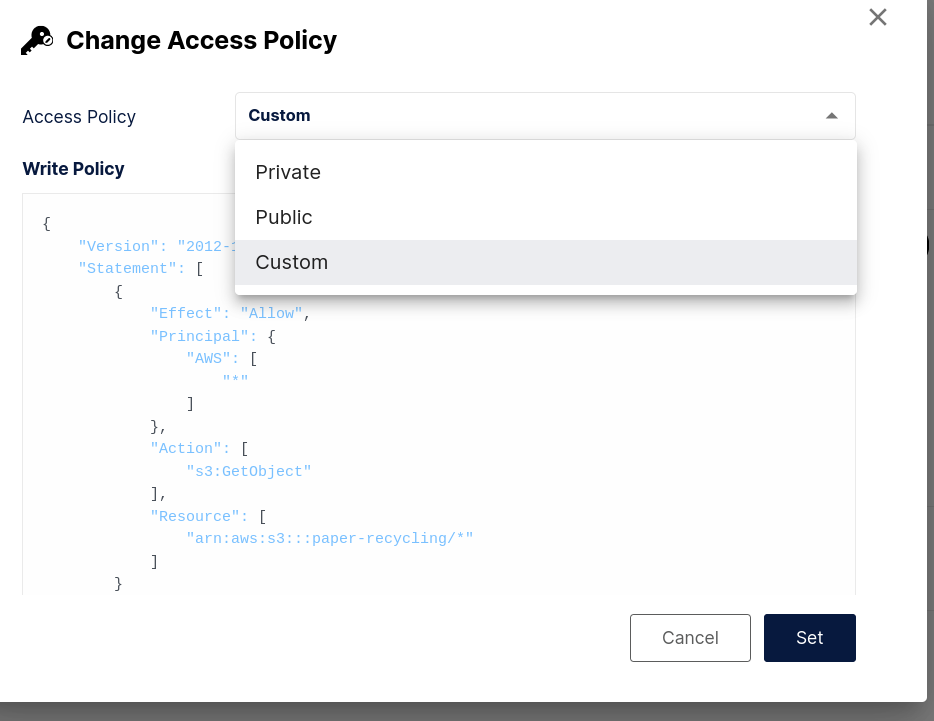

Here, set your bucket's access policy to public. Once the bucket is public, we can preview every object in it with this URL pattern:

    https://{minio-host}:{minio-port}/{bucket_name}/{object_name}

We can then save this URL in the database.

But when the policy is set to public, anyone can access your bucket and do bad things with it. We only want to let users read the objects in the bucket, so we need to add a custom policy to the bucket.
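Going back to the URL pattern for a moment, here is a small sketch of a helper that builds it. The function name publicObjectUrl and its parameter names are made up for this example; encoding the object name is an addition of mine so that names with spaces still give a valid URL:

```javascript
// Build the public URL of an object in a publicly readable bucket.
function publicObjectUrl({ host, port, useSSL, bucketName, objectName }) {
  const protocol = useSSL ? 'https' : 'http';
  // Leave out the port when it is the default one for the protocol.
  const isDefaultPort = (useSSL && port === 443) || (!useSSL && port === 80);
  const authority = isDefaultPort ? host : `${host}:${port}`;
  // Encode each path segment so spaces and special characters stay valid,
  // while keeping "/" inside object names as a path separator.
  const encodedName = objectName.split('/').map((part) => encodeURIComponent(part)).join('/');
  return `${protocol}://${authority}/${bucketName}/${encodedName}`;
}

console.log(publicObjectUrl({
  host: 'play.min.io',
  port: 9000,
  useSSL: true,
  bucketName: 'my-bucketname',
  objectName: 'girls/my idol.png',
}));
// prints "https://play.min.io:9000/my-bucketname/girls/my%20idol.png"
```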

In the bucket policy settings, choose custom, then paste this policy object:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": [
              "*"
            ]
          },
          "Action": [
            "s3:GetObject"
          ],
          "Resource": [
            "arn:aws:s3:::your-bucket-name/*"
          ]
        }
      ]
    }

Remember to replace "your-bucket-name" with your own bucket name. OK, now only the GetObject action on our bucket is public for everyone.
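The same policy can also be applied from code instead of the dashboard. This is a minimal sketch, assuming an SDK version where setBucketPolicy returns a promise when no callback is passed; the helper names readOnlyPolicy and makeBucketReadOnlyPublic are made up for this example:

```javascript
// Build a read-only (s3:GetObject) policy document for one bucket.
function readOnlyPolicy(bucketName) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Principal: { AWS: ['*'] },
        Action: ['s3:GetObject'],
        Resource: [`arn:aws:s3:::${bucketName}/*`],
      },
    ],
  };
}

// Apply the policy; `client` is expected to be a MinIO client.
async function makeBucketReadOnlyPublic(client, bucketName) {
  // setBucketPolicy takes the policy as a JSON string, not as an object.
  await client.setBucketPolicy(bucketName, JSON.stringify(readOnlyPolicy(bucketName)));
}
```

Building the policy from the bucket name avoids forgetting to replace the placeholder by hand.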

4. Create the upload API

- We can now use this new flow for our upload API. The user calls an upload API, e.g. /api/v1/upload, with the file in the body. The backend server receives the file, puts it into MinIO with the fPutObject method, then returns the public object URL following this pattern: https://{minio-host}:{minio-port}/{bucket_name}/{object_name}

- Here is some demo code using Express with the multer middleware for file uploads.

- A sample service class that wraps the MinIO client:

    // minio service
    import { Client } from 'minio'

    // MINIO_CLIENT (the MinIO host), MINIO_PORT, MINIO_ACCESS_KEY, MINIO_PRIVATE_KEY
    // and MINIO_BUCKET_NAME are expected to come from your project's configuration.

    class MinioService {
      private readonly minioClient: Client
      private readonly expiry = 60 * 60 * 24 * 7 // 7 days

      constructor() {
        this.minioClient = new Client({
          endPoint: MINIO_CLIENT,
          port: +MINIO_PORT, // the client expects a number
          useSSL: false,
          accessKey: MINIO_ACCESS_KEY,
          secretKey: MINIO_PRIVATE_KEY
        });
      }

      // Presigned URL the user can PUT a file to.
      async getPresignedUrl(objectName: string) {
        return new Promise((resolve, reject) => {
          this.minioClient.presignedPutObject(MINIO_BUCKET_NAME, objectName, this.expiry, function (e, presignedUrl) {
            if (e) {
              console.log(e)
              // return, so resolve() is not called after a failure
              return reject(e)
            }
            resolve(presignedUrl)
          })
        })
      }

      // Presigned URL the user can GET the object from.
      async getDownloadUrl(objectName: string) {
        return new Promise((resolve, reject) => {
          this.minioClient.presignedGetObject(MINIO_BUCKET_NAME, objectName, this.expiry, function (e, presignedUrl) {
            if (e) {
              return reject(e)
            }
            resolve(presignedUrl)
          })
        })
      }

      // Upload a file saved on disk by multer and resolve with its public URL.
      async putObject(file: any) {
        return new Promise((resolve, reject) => {
          this.minioClient.fPutObject(MINIO_BUCKET_NAME, file.filename, file.path, {
            'Content-Type': file.mimetype,
            'Content-Length': file.size,
          }, function (e) {
            if (e) {
              return reject(e)
            }
            // On port 80 the URL is returned without a port, using https.
            if (+MINIO_PORT === 80) {
              const objectUrl = `https://${MINIO_CLIENT}/${MINIO_BUCKET_NAME}/${file.filename}`
              return resolve(objectUrl);
            }
            const objectUrl = `http://${MINIO_CLIENT}:${MINIO_PORT}/${MINIO_BUCKET_NAME}/${file.filename}`
            resolve(objectUrl);
          })
        })
      }
    }

    export default MinioService

- A sample controller that uploads a file using the service class above:

    async uploadFile(req: Request, res: Response) {
      try {
        // Run the multer upload middleware; it populates req.file.
        await uploadFileMiddleware(req, res);
        const file = req.file;
        validateImage(file);
        const url = await this.minioService.putObject(file);
        // Remove the temporary file from the server
        fs.unlinkSync(file.path);
        return res.status(200).json({
          message: 'Upload file success',
          data: url
        });
      } catch (err: any) {
        console.log(err)
        return res.status(500).json({
          message: 'Upload file failed: ' + err.message
        })
      }
    }

5. Conclusion

That's how we can upload files to MinIO. In this blog I don't provide the full code, I only explain the implementation logic; depending on your project's setup, you can do it your own way. Good luck.
